Clever Hans and the Uncanny Valley

In 2001, Rob Malda reacted to the first iPod with, "No wireless. Less space than a Nomad. Lame." This might be the worst hot take in the history of tech, but I wonder if it'll be dethroned by the reactions to Apple's AI offerings on Monday.

I have very low expectations, and not because Apple lacks the resources to hop on the latest AI fad. I know that Apple focuses on technology that solves real-world problems. This may surprise you if you spend too much time on Twitter, where the same people who hyped crypto in the late 2010s have moved on to AI, but I think there's still a huge gap between AI's potential and products that serve Apple's mainstream audience.

Let's look at AI today, and reasonable expectations for products in 2024.

ChatGPT

It's impressive that you can now ask a computer a question and get back human-sounding results. When non-technical people try it for the first time, too many of them believe the software actually thinks, like the AI they see in the movies. Yikes.

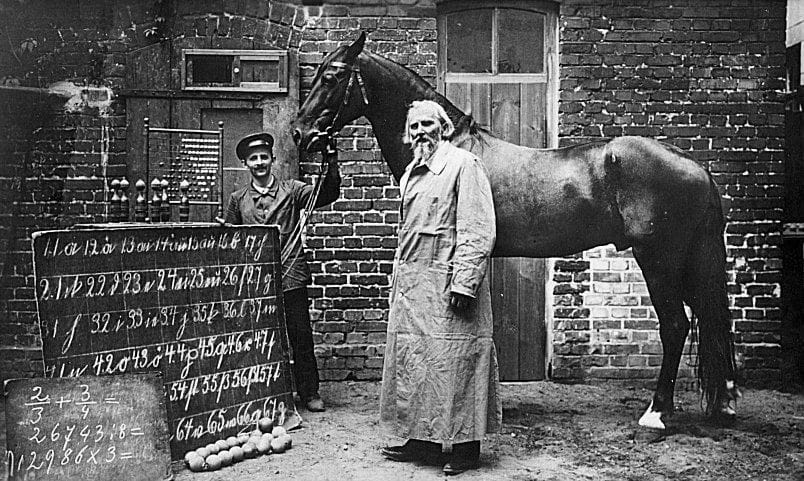

ChatGPT is like Clever Hans. In the early 20th century, there was a lot of hype around smart animals thanks to Charles Darwin, and an elementary school math teacher, Wilhelm von Osten, became a minor celebrity with Hans, his horse that had supposedly learned math.

Hans was said to have been taught to add, subtract, multiply, divide, work with fractions, tell time, keep track of the calendar, differentiate between musical tones, and read, spell, and understand German. Von Osten would ask Hans, "If the eighth day of the month comes on a Tuesday, what is the date of the following Friday?" Hans would answer by tapping his hoof eleven times. Questions could be asked both orally and in written form.

In reality, Hans had no idea what he was doing. Horses are good at watching people's reactions, and the people asking questions had a "tell" when Hans tapped the right answer. Hans wasn't trying to fool people; he just knew he'd be praised and rewarded if he stopped tapping his hoof when, say, the questioner slightly smiled. Hans' answers were only correct if the person asking the question already knew the answer.

When you type into your iPhone and its keyboard suggests the next word, your phone is a Clever Hans. It doesn't understand the meaning behind your sentence. It just knows that when a sentence starts with "I love to drink," the word "coffee" gets a better reaction than "bleach." ChatGPT is a complex iPhone autocomplete that, instead of single words, takes in sentences and spits out paragraphs.
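To make the autocomplete comparison concrete, here is a minimal sketch of next-word suggestion by counting which word most often follows another. It is a toy: the corpus is made up, the `train` and `suggest` names are mine, and neither the iPhone keyboard nor ChatGPT is implemented this way. It only shows how "coffee" can win without anything understanding the sentence.

```python
from collections import Counter, defaultdict

# Toy corpus, invented for illustration; a real keyboard learns from far more text.
CORPUS = [
    "i love to drink coffee",
    "i love to drink coffee every morning",
    "i love to drink tea",
    "we like to drink coffee",
]

def train(sentences):
    """Count how often each word follows each other word."""
    following = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following

def suggest(following, prompt):
    """Return the most frequent follower of the prompt's last word, or None."""
    last = prompt.lower().split()[-1]
    candidates = following.get(last)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

model = train(CORPUS)
print(suggest(model, "I love to drink"))  # prints "coffee"
```

The model never sees "bleach" after "drink," so it never suggests it. That is popularity, not judgment.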

The problem is that ChatGPT is often right, but sometimes makes things up. (The technical term is "hallucinate," the same way you might call a plane crash a "controlled flight into terrain.") ChatGPT faces the same pitfalls as self-driving cars that occasionally try to murder the driver. When you have a system that works flawlessly 99% of the time and fails catastrophically 1% of the time, you end up with idiots riding home drunk in the back seat of the car.

At least self-driving cars now force people to pay attention the whole time, but what can you do with ChatGPT, beyond adding a warning label? AI companies make things harder by warning about the wrong things; when they talk about AI safety, they want you to think about a system so powerful it could destroy humanity, like Skynet or AM. They don't want to talk about that idiot who sold a mushroom identification app that would kill you.

If Siri gets ChatGPT-like functionality, it hinges on Apple finding a way to make AI not tell you to put glue on your pizza. If every Siri response includes a weak, "Always verify these facts for yourself," why not just skip to Google? If Apple omits the warnings without solving the problem, it's in for a world of class action lawsuits.

Today's AI is not good at giving you trustworthy results, but it's probably fine for your email app to summarize a thousand-word email into a sentence or two.

The Dead Eyes of Generative AI

Many people use AI to write their school essays, or content for a corporate blog. AI is good at regurgitating other people's ideas, and that's most of what that type of writing calls for. It raises a meta question of why we waste so much of our lives outputting drivel, but it is what it is.

If we hold AI content up against good writing and evaluate it critically, it's as painful to look at as a computer-generated human from the '90s.

AI lacks a human perspective, but the problem goes beyond the fact that it was written by a machine: it was pulled from more than a single human. It is the average of millions of pages of text written by millions of people, with no one single person behind the wheel. There is no nuance. There is no flair. You can ask it to inject jokes, but the jokes are lame. It lacks an overarching structure, patterns, and callbacks. Yikes.

It's much more reasonable to pitch an AI product that handles writing boilerplate in trivial emails. I like writing, but get no joy from the boilerplate "I hope this email finds you well" when I just want to ask my accountant if I can write off my dry cleaning. Please AI, save me from small talk.

When it comes to imagery, my expectations are rock bottom. I would rather watch two hours of the worst CG from the '90s than five minutes of today's AI slop.

The first AI generated movie.

I like Dan Olson's comparison of generative AI to a slot machine where you just keep putting in quarters until you get a good result. Now what do those results look like? Just browse the trash that overruns Facebook.

Today's generative art all looks the same. The lighting is wrong. The composition is too textbook. The faces look uncanny.

AI proponents tell you that you just need the right prompt, but to articulate that, you need to understand what's wrong with an image, and most people lack training in the fine arts.

If I had to build a generative image product using today's technology, I'd constrain it to something unrealistic. The rumors of "generative Memoji" fit the bill, but what else?

Kids of the '90s may remember clip art: stock illustrations that you could paste into your word processor documents to add a little personality.

I could see value in generative clip art. It'd be cool to see a sample illustration and say, "That's perfect, but could that be a cat instead of a turtle?" You'll never hang this in a museum, but I bet it could be good enough for a school newsletter.

Apple is one of the few companies in the world that doesn't need to steal other people's artwork to make this. They have enough money and resources to hand-curate a million sample images without any naughty bits or public figures, and they can pay artists for the rights to use the data.

Rotoscoping as a Case Study

In the old days, artists spent hours hand-drawing a few seconds of animation.

By the 1990s, studios outsourced much of their animation; in the case of The Simpsons, directors sent storyboards to animators in South Korea to just fill in the blanks. The adoption of computers sped up the process further, with software handling the interpolation between individual frames.
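For the curious, filling in frames between two key poses can be sketched as simple linear interpolation. This is a bare-bones illustration under my own assumptions (a single point moving in a straight line, with hypothetical `lerp` and `inbetween` helpers), not how any particular studio's software works.

```python
def lerp(a, b, t):
    """Linearly interpolate between a and b, where t runs from 0 to 1."""
    return a + (b - a) * t

def inbetween(start, end, steps):
    """Return `steps` intermediate (x, y) positions strictly between two keyframes."""
    frames = []
    for i in range(1, steps + 1):
        t = i / (steps + 1)  # evenly spaced, excluding the keyframes themselves
        frames.append((lerp(start[0], end[0], t), lerp(start[1], end[1], t)))
    return frames

# An artist draws the first and last pose; the computer fills in three frames between.
print(inbetween((0.0, 0.0), (8.0, 4.0), 3))
# prints [(2.0, 1.0), (4.0, 2.0), (6.0, 3.0)]
```

The computer does the rote part, but only along the path the math allows, which hints at why simple shapes are easier to animate this way.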

Computers are great at cutting out the rote work, but it does come at a cost. In the case of animation, there's been criticism over the CalArts Style, a style that favors thin linework and simple shapes that make computer animation easier. As a society, we gained more animation in total, at the cost of an Akira.

The one area where nobody seems to complain about AI in art is rotoscoping, the tedious job of tracing actors in images.

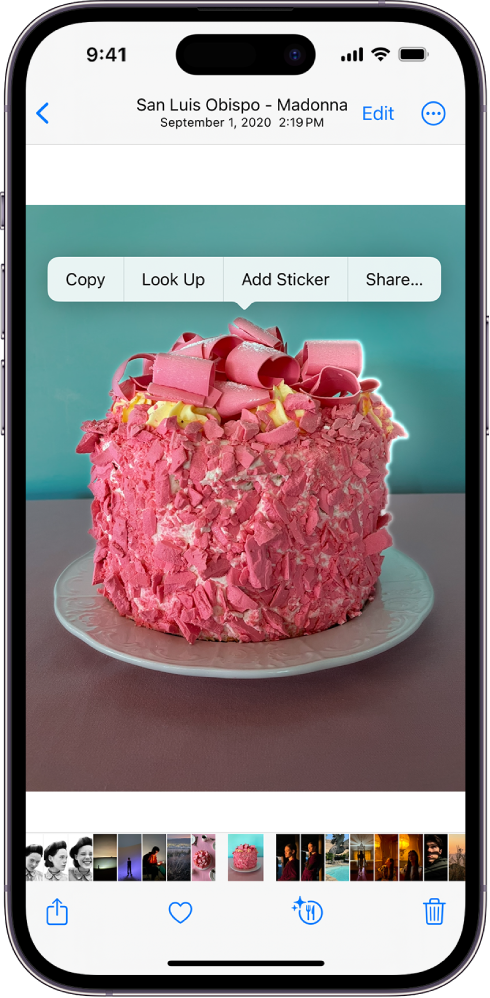

Did you know Apple made this a mainstream product two years ago? They didn't call it rotoscoping. It was Object Lifting.

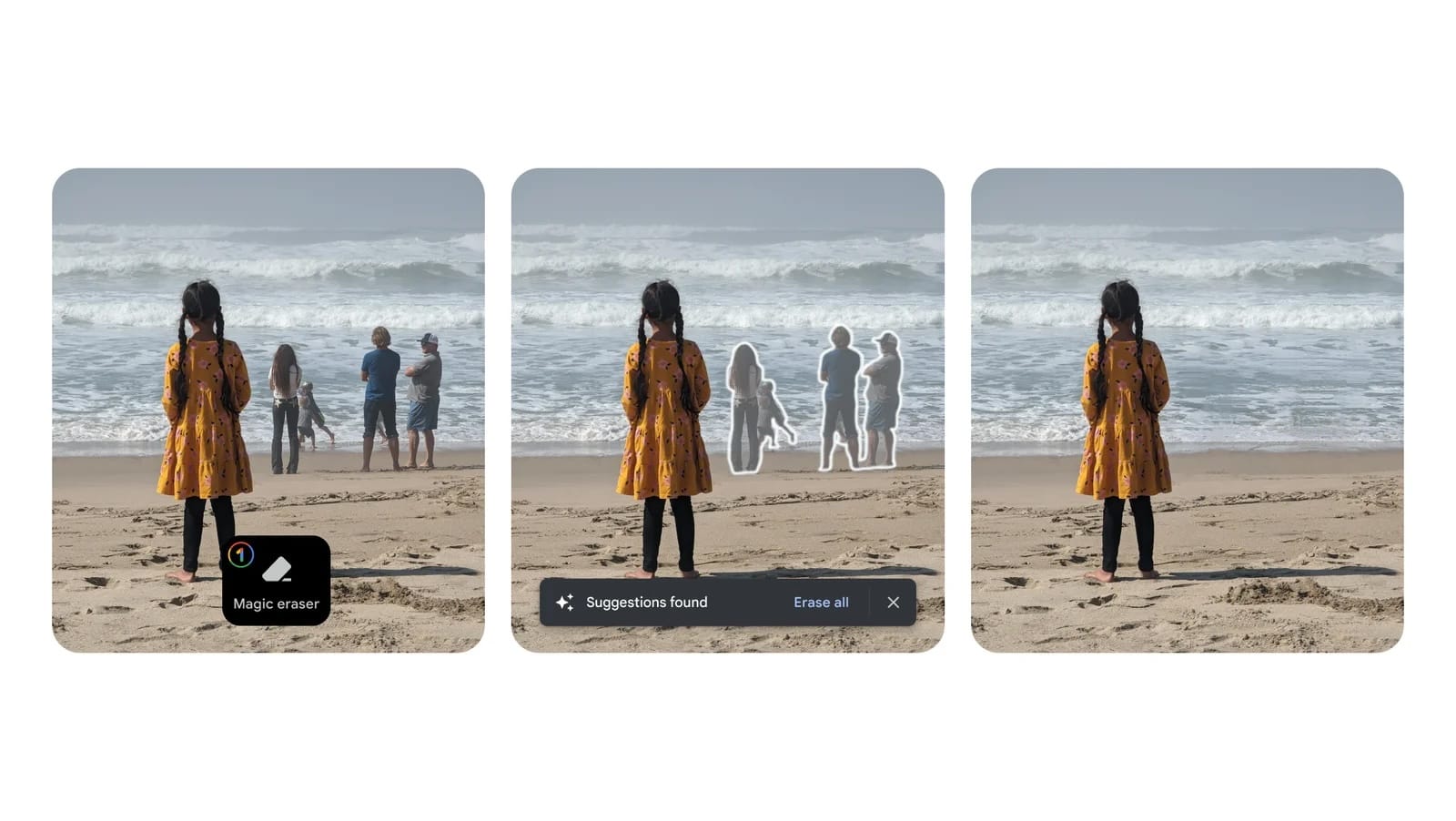

With generative AI, it's possible to push this even further with "inpainting." Instead of just copying the object, you remove it by asking AI to guess what would go in that spot if you deleted the object. Google introduced its version of this with Magic Eraser in 2021, and I have trouble believing Apple is far behind.

Going back to the first iPod, Rob Malda wasn't entirely wrong; without the iTunes Music Store, it was just a weaker Nomad. The killer feature of the iPod wasn't the number of songs you could fit on the device, but the ability to get songs on the device in the first place. Sure, you could rip CDs, but the iPod really took off with the launch of the iTunes Music Store in 2003.

Without object removal, "object lifting" a subject into a sticker is just a gimmick. When I look toward WWDC, I don't judge technology by what's announced today. I ask how this lays the groundwork for features in two years.